Interview with Alessandro Ludovico for Neural Magazine nr 52: Complexity Issue(s).

1. What’s your definition of “complexity”?

Complexity without a context means nothing to me.

2. In your seminal “Glitch Studies Manifesto” you wrote: “This [computer] system consists of layers of obfuscated protocols that find their origin in ideologies, economies, political hierarchies and social conventions, which are subsequently operated by different actors.” Do you think that today we are able to write about computation as a history of power relations, in a way similar to how classical historians have written history?

Back when I was in high school I was taught that history was printed on dead trees (in history books). A compressed version of history graced the back wall of our school classroom, where it was drawn up as a line, interrupted by dots that signified important moments. How modern it was, history drawn out like a stacked conveyor belt of causes and facts! I have learned to look beyond that dogma of mono-history and realize that to any tale there is a collection of accounts, trails and perspectives. History is not something linear, some orchestrated scheme in which one push results in action. This is not Der Lauf der Dinge (1987)! The histories of computing are multithreaded and exist on different clocks, which not only run between kairos and chronos but also in high frequency and deep time. There are only arbitrary beginnings from which we can descend into these coves: from the woven abysses of protocols we can wade through the non-linear meshes of informed materials and enter the diverse tides of frameworks and standards. But let us not get trapped in these currents of ever-eroding systems. What I am trying to say is: writing a history (of computing) is a form of conflating complexity. She who takes on the role of Janus and sets out to resolve a future history from the relationships between actions, ideologies and hierarchies, and he who weaves these threads into a kludgy plot and calls it ‘history’, should also take the responsibility to create a sandbox in which we can all build and test alternatives.

Rosa Menkman, Vernacular of File Formats. AVI Cinepak, 256 Grays, 2011

3. Do you think that historically the “Vernacular of File Formats” has been the most stable and understandable digital construct (the “grammar”)? Is it maybe even a digital construct onto which we can build an alternative discourse?

I know of quite a few digital compressions that have become obsolete, unsupported or in another sense ‘unstable’ within the last few years, so it is a fallacy to refer to these digital constructs as ‘stable’. AVI Cinepak for instance, a lossy video codec developed as part of QuickTime's video suite in 1991 and later implemented in game consoles such as the Atari Jaguar, has a setting that lets you compress video in 8-bit grayscale (256 Grays). But when I use this setting in QuickTime 7, the video plays back as if it were encoded within a very odd 8-bit color spectrum. This unstable color encoding became even more exciting when I tried playing back my compressed video in certain older iterations of the VLC player, which assigned a different color palette on every replay, cycling through them arbitrarily.
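As an editorial aside, the effect described above can be sketched in a few lines of Python. This is only an illustration of the general idea of indexed color, where the same 8-bit values look different depending on which lookup table a player applies. It is not how Cinepak, QuickTime or VLC actually work internally, and the second palette (`odd_palette`) is invented for the sketch.

```python
# Hypothetical illustration: the same 8-bit samples read through two palettes.
# Neither palette is taken from Cinepak, QuickTime or VLC.

samples = bytes([0, 64, 128, 255])  # four 8-bit pixel values

# Palette 1: 256 grays, where index i maps to the gray (i, i, i).
gray_palette = [(i, i, i) for i in range(256)]

# Palette 2: an arbitrary color table, made up for this sketch.
odd_palette = [(i, (i * 3) % 256, 255 - i) for i in range(256)]


def decode(data: bytes, palette: list) -> list:
    """Look up every 8-bit value in the given palette."""
    return [palette[value] for value in data]


as_gray = decode(samples, gray_palette)
as_color = decode(samples, odd_palette)

print(as_gray)   # [(0, 0, 0), (64, 64, 64), (128, 128, 128), (255, 255, 255)]
print(as_color)  # [(0, 0, 255), (64, 192, 191), (128, 128, 127), (255, 253, 0)]
```

The stored bytes never change; only the table used to read them does.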

Much more than we generally realize, image compressions (data organizations that possess their own artifacts or “aesthetic Vernaculars”) exist within a specific time and environment.

I believe that data should be understood as a material that has the potential to be fluid. Hardwares, softwares and platforms can leave traces upon the data they syphon. The string 010100110100111101010011, for instance, can be transcoded into anything, depending on the softwares that process it. The architectures through which we approach this string transform not only themselves (softwares get upgraded and support gets dropped) but also the string, by leaving traces in its metadata (informing it) or by converting it to a ‘better’ organization. This is actually very meaningful and can easily get lost when thinking about image file formats as ‘stable’. Thinking about syphoning data is important, especially as a critique of the constricting and exclusive architectures the platforms are creating. I am just softly poking for a rheology of data here. Rheology, a term hailing from mechanics, is often used to describe the flow of matter, primarily in a liquid state, but also as ‘soft solids’, or solids that respond as non-solid plastic. So the rheology of data would entail a study of the fluidity of data, or how we can keep things leaking and unstable.
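As an editorial aside, here is a minimal Python sketch of how the 24-bit string quoted above changes meaning with the reader. The three readings chosen (ASCII text, a single integer, one RGB pixel) are assumptions picked for illustration; the interview does not name any particular interpretation.

```python
# The 24-bit string from the text, read through three different "softwares".
BITS = "010100110100111101010011"


def to_bytes(bits: str) -> bytes:
    """Pack a string of '0'/'1' characters into bytes, 8 bits at a time."""
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


raw = to_bytes(BITS)

as_text = raw.decode("ascii")  # read as three ASCII characters
as_number = int(BITS, 2)       # read as one unsigned integer
as_pixel = tuple(raw)          # read as one RGB pixel (R, G, B)

print(as_text)    # SOS
print(as_number)  # 5459795
print(as_pixel)   # (83, 79, 83)
```

The same bits come out as a distress call, a number, or a dull purplish gray, depending only on the convention applied to them.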

4. Recently you told me “I propagate an awareness of the resolution”. Can you elaborate more on that?

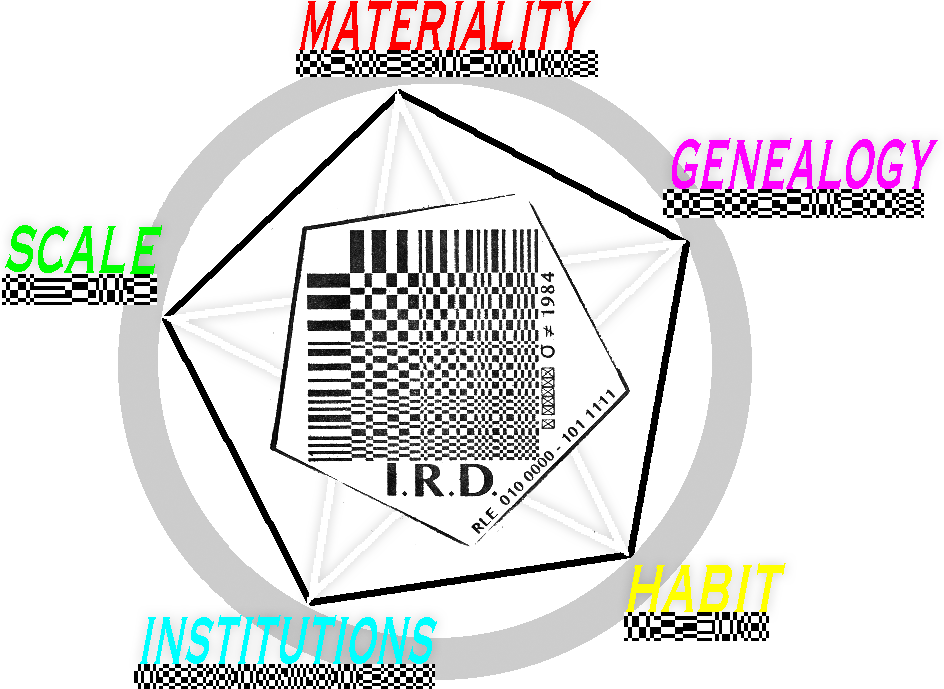

The meaning of the word resolution depends greatly on the context in which it is used. The Oxford Dictionary, for instance, lists definitions from within the discourses of music, medicine, chemistry, physics and optics. What is striking about this list is that at first glance some of these definitions read as contradictory; while in chemistry resolution may mean the breaking down of a complex issue or material into smaller pieces, mathematics uses the term resolution to refer to the answer that solves a particular problem. The Oxford Dictionary ends its list of definitions with: “the degree of detail visible in a photographic or television image.” While this definition of the term resolution is the closest to a definition of resolution within the realm of the digital, it also conflates the actual processes and dimensions of a resolution into a simple and standardized quantitative value. After taking resolution to its etymological roots, it becomes possible to understand resolution as a double act: resolution stems from the Latin word resolvere, which can be split up into ‘re’ (back) and ‘solvere’ (to loosen up). Resolution thus combines the understanding of what you can see, and the ‘loosening up’ of the individual actors that play a role in constructing this visibility. In this sense, resolution may refer not only to what you can see (the number of pixels) but also to which actors play a role in making something visible, and maybe, in a sense, also to the inherent compromises between these different actors.

If we accept this expanded definition of resolution, the term can also be used to refer to a space of compromise between different actors (objects, materialities, and protocols) in dispute over norms (frame rate, number of pixels etc.). I think this is an important step toward realizing that resolutions are non-neutral, standard settings that involve political, economic, technological and cultural values and ideologies, embedded in the genealogies and ecologies of our media. In an uncompromising fashion, quality (fidelity), speed (governed by efficiency), volume (generally encapsulated in tininess for hardware and bigness when it comes to data) and profit (economic or ownership) have been responsible for plotting them. I would like to add that while I am not against resolutions and the functionalities that plot them, I do think it is important to demonstrate that there is more to resolution than the conflated understanding of it as a quality measured solely through quantity.

Image: Rosa Menkman, iRD Logo, 2015

5. In your recent research you’ve founded a virtual institution called “institutions of Resolution Disputes/iRD”, using ambiguity as a strategic tool to trigger further investigations. Does the iRD also reflect on the historical role of institutions to mediate complexity? And what would you like your institution to do?

I started the iRD in March 2015, just after I was fired from my favorite institution. I did not see it coming; it was over some silly paperwork and I am still baffled that this happened the way it did, without support or aftercare. This was my first job with a desk inside an institute (which at the time sounded appealing). I had moved back from London to Amsterdam to work for this institute, so when I was let go two days before the start of my contract I was without a job, with too much time and in a state of disillusionment.

The iRD is actually not one institute, but 5 institutions, each following their own logic formulated within the same framework: a text in DCT, an encryption method I developed for a Crypto Design Challenge (a challenge that was, ironically enough, organized by the same institute that had let me go), which subsequently won first prize. These are institutions that anybody can use or call on when in need of an institute (for instance when they need to write an application).

The iRD are a covert criticism of what I feel is a defective culture within institutions. The role of an institution should be the constructive mediation between function and action of entities and human actors. But it has become clear to me that instead of using the power of an institution constructively, these institutions have become a facade for people to hide behind. In the end, institutions are just social constructions, so why do they not favor quality over output?

I don’t believe that institutions are bad, but the people running these institutional frameworks should keep in mind that these so-called institutions are social constructs and that not every rule in their institution always results in a positive outcome. Finally, while the people in charge have a responsibility to apply the rules, they also need to be able to bend them. Institutions and their leaders have an ethical responsibility to be there for the humans, not for the rules.

6. As the pervasiveness of industrial digital paradigms is slowly affecting “objects,” what kind of computation would you like them to perform, instead of being chained to categories like “efficiency and functionality”?

I would like technologies to be more open and modular.

7. If we consider “error” as a manifestation of a still unknown layer of knowledge, can we venture, in your opinion, to interpret it as a real gateway towards a still un-decoded understanding and not as a temporary dysfunction? How can that relate to your concept of “magic in the machine”?

Error, or a lack of legibility, can also exist by the grace of a lack of literacy from the reader. The idea of an actual system behind the noise is a form of chaos magic; my perspective determines my future system. But someone with a similar yet slightly different perspective will definitely not have the same future as I will have.

Unfortunately, most of the syphoning done by the ‘chaos magicians’ within the digital focuses primarily on aesthetic research, rather than applying chaos magic as a critical perspective that can help us to circumvent or expand the rules of the platforms implicated. They could also question other important subjects like security, freedom of information or socio-political issues connected to free data.

The mysteries that this type of aesthetic alchemy sparks can be useful in the battle against standardization. However, when the same or similar ‘experiments’ are done time after time, these experiments change from meaningless objects full of kairos to meaningful utterances in themselves.

This is how a Vernacular of File Formats becomes regressive and even obfuscates the fluidity of data. Today, 9 out of 10 Hollywood movies show the voice of artificial intelligence as disturbances in blocks of data; in a way, artificial intelligence organizes itself in clusters beyond human intelligence. When aliens invade the world, the noise of their signals often shows as lines, referring to the fact that they are not necessarily smarter, but just communicating on another frequency, and the humans will have the potential to outsmart them in the end. Finally, ghosts still only communicate through analogue forms of noise. We have come to a point where even instabilities are meaningful and slowly ossify within a folksonomy of noise affect, indexing alternative forms of legibility.

8. Do you think that at some point in the near future the negotiations of signs will transcend our current layers of understanding and get closer to the computational ones?

The ISO (since 1947) is in a sense exemplary of what Orwell described in 1949 as INGSOC and its Newspeak. We come from an era of standardization. But this was just a beginning. The standards today are not set by institutions but by platforms. They are not de facto, but they are de jure, and they are not enforced by penalty, but through exclusion and deletion.

This new standardization of signs is not there to serve the individual, but to serve the platform, which is a closed entity. It pretends to become more and more “user friendly”, but in reality it takes the freedom of the individual and transforms their utterances into effective platform speak. If that sounds transcendental, all the more power to you, but to me the freedom lies within the increments, and the dismantling of the resolutions.
